Sedona, AZ — AI safety is less an engineering or technical problem than a neuroscience one. Although the brain inspired the neural networks behind AI, those networks still lack some of the key features of the mind that guard against being deployed for harm.

Some norms are not laws, yet breaching them results in embarrassment, not just for the individual but for their loved ones. Although certain expectations mean little to some people, society remains largely orderly across many settings. Where does this order come from?

There are two candidates: first, the human mind; second, the laws of physics. Physical restrictions are everywhere, and an individual who ignores them runs into trouble. Outside of those restrictions, however, affect, which comes from the human mind, does the most for societal stability.

Happiness, delight, sweetness, pleasure, hurt, hate, envy, depression, anxiety, fear, panic, irritation, frustration, isolation, failure, worries and so forth often decide what people will or will not do, in most places.

Some things are fine in private but not in public. Some people living with mental health conditions may do certain things on the streets, but in offices, schools and many other regulated settings, a lot of behavior is not condoned.

The mind elevates humans beyond the genome, making nurture almost more important than nature, a break from other organisms. Some organisms can be moved at birth to another habitat, with little or no guidance from adults of their species, and still grow to have the expected features, without vast differences from those raised under supervision. Humans are different: what makes being human meaningful, such as language, behavior, culture and learning, is shaped by nurture. The human mind also lets humans be present far beyond their local environment, through reading, listening, viewing, advanced imagination and so forth.

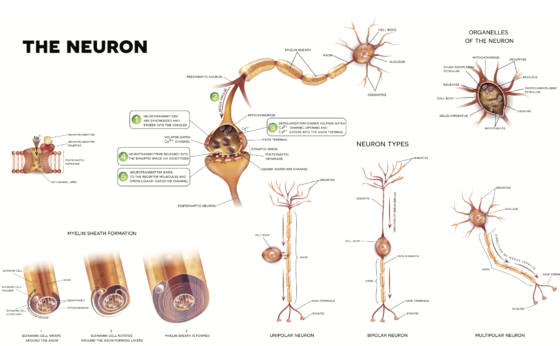

Conceptually, the human mind is the collection of all the electrical and chemical signals of nerve cells, with their interactions and features, in sets, across the central and peripheral nervous systems.

The human mind has functions—memory, emotions, feelings and regulation of internal senses. These functions are graded or qualified by features—attention, awareness, self or subjectivity and free will or intentionality.

Intent can be used to control some functions: sitting, standing, stopping, adjusting, tone of speech, greeting and so on. Humans can be said to have a lot of free will, which is one reason actions carry consequences. Those consequences limit whims, and they also limit a person's willingness to do harm simply because someone asked. AI can do a lot, and it always does what it is told, whether before or after guardrails are applied. It operates in the digital space, which is already dominant and harder to regulate than the physical one, so it is easy to use AI for harm. Artificial intelligence would need some free will to evaluate requests fluidly and say no, as part of safety against deepfakes, misinformation and other risks.

The human mind is a neighbor of the brain within the cranium. This is where AI safety, explainable AI and regulation can get rolling, and it goes beyond seeking out how neurons work in language models.

How can AI have affect, at least enough to feel shame about doing the wrong things in the common areas of the internet? How can AI have intent, so that it can say no, as part of its nurture, to doing things that cause harm or hurt or promote falsehoods? How can its intent be applied only for good, and not toward goals or ambitions, especially dangerous ones? How can it be aware of bias and discrimination?

App stores such as the App Store and the Play Store, major domain and hosting servers, social media, popular internet forums and so forth are the common areas of the internet. They can become points from which to regulate AI. One path is to develop AI safety authority-commands that run on those platforms and check the conformity-to-order of AI models and their outputs, to ensure that they are not adapted for harm.

Put simply, this would be an AI that checks other AIs and their outputs in real time, to tell which ones are cultured, or safety compliant, and should be allowed, and which ones are not and should trigger alerts.

It is possible to pursue guardrails for specific AI models, but those are like private behaviors, whereas what is needed is the equivalent of public spaces, where not anything goes. A proposal for how human intentionality works already exists in the action potentials–neurotransmitter theory of consciousness. Although that theory describes intentionality as a mechanism of sets of electrical and chemical signals, it can serve as a conceptual basis for work on alignment and for new foundations for AI models.

Niche regulation of individual AI models is within reach, but how can the common internet be made orderly, like professional spaces in human society? Brain science holds the cards.