By David Stephen

Progress in AI safety is not currently a matter of better evaluations or benchmarks for models, since new benchmarks alone are unlikely to solve present problems such as misinformation and deepfakes (images, audio, and video). Several present risks of AI are ones that new evaluation methods may do little or nothing to address.

How can the AI source of a piece of misinformation, or of a cloned voice used for deception, be traced? How can an AI model that produces a problematic output despite its guardrails be penalized for its actions?

There are already several benchmark and evaluation rankings for LLMs. While they measure certain criteria, they do not solve some current problems, nor do they offer a reliable way to determine what artificial general intelligence [AGI] or artificial superintelligence [ASI] is, or when either may arrive.

A recent WIRED feature, We’re Still Waiting for the Next Big Leap in AI, notes: “Gauging the rate of progress in AI using conventional benchmarks like those touted by Anthropic for Claude can be misleading. AI developers are strongly incentivized to design their creations to score highly in these benchmarks, and the data used for these standardized tests can be swept into their training data. Benchmarks within the research community are riddled with data contamination, inconsistent rubrics and reporting, and unverified annotator expertise.”

Anthropic recently announced A new initiative for developing third-party model evaluations, stating: “We’re introducing a new initiative to fund evaluations developed by third-party organizations that can effectively measure advanced capabilities in AI models. We are interested in sourcing three key areas of evaluation development: AI Safety Level assessments; Advanced capability and safety metrics; Infrastructure, tools, and methods for developing evaluations.”

How would this counter general misinformation? How would it prevent deepfake videos, audio, and images outside closely scrutinized areas such as politics and elections? Many AI tools that appear in search results make various kinds of misuse possible. How can there be a collective safety approach against some of their outputs?

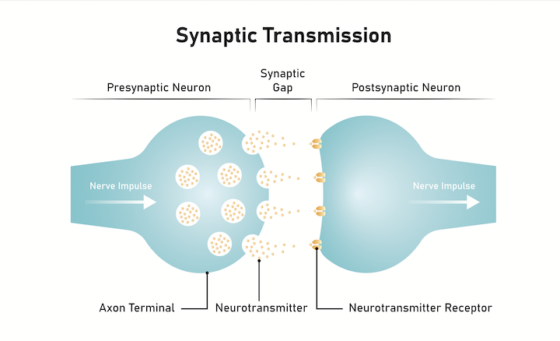

How can AGI or ASI be determined or measured by comparison with how human intelligence works? If human intelligence is based in the human mind, how does the human mind mechanize intelligence? AI already has access to vast amounts of memory. It can make inferences about the world through language without firsthand experience. If a human mind had access to the same resources about things, but no experience of them, how would current AI compare?

There are several detection tools for AI outputs, with varying levels of accuracy, but knowing that something was generated by AI may not matter if it has already been used to cause harm. How could web crawling and scraping be used to track AI outputs on certain keywords across content indexed by search engines?

If an AI model is misused, how could it begin to lose access to some of its parameters as a consequence of its actions? Some directions in AI safety and alignment research may not be helpful for current risks, or for existential risks. There are also efforts to build benchmarks for AGI without exploring the human mind.

The threats and risks of AI go beyond the safety of individual frontier models, and the capabilities of AI extend beyond language. Approaching answers from broader angles would make a better case for the common purpose.