Could the potential for AI consciousness be expressed as a probability problem? This would involve the sample space of all possible conscious experiences for humans, and the functions and attributes that humans and AI share.

Conceptually, consciousness can also be described as the action of attributes on functions. Attributes always act on functions, but only when they act at certain measures do certain functions become experienced.

Functions are theorized to result from the interactions of electrical and chemical signals in sets, or clusters, of neurons. Attributes are the respective characteristics of the electrical and chemical signals, by which they grade interactions. Simply put, functions are interactions; attributes are the states of the signals in instances of interaction, which become measures of those interactions.

So, electrical and chemical signals interact to produce functions. But the state of the electrical and chemical signals at the time of interaction determines, conceptually, [the measures or] whether some functions become experienced.

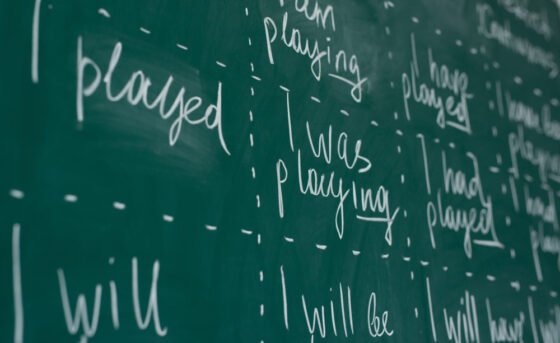

There are 4 groups of functions. Memory [language, intelligence, cognition, reasoning, and so on]; Feeling [pleasure, pain, thirst, cold, heat, appetite and so on]; Emotion [delight, hurt, love, hate and so on]; and Regulation of internal senses [digestion, respiration, and so on]. Functions are numerous but finite.

Attributes include Attention [or the most prioritized set of electrical and chemical signals in an instance, among all sets]; Awareness [all other sets of signals, pre-prioritized]; Subjectivity [the self, or the side-to-side variation in the volume of chemical signals]; and Intent [or a space of constant diameter, available in some sets, from which control is induced]. Other attributes include splits [where some electrical signals in a set go ahead of others to interact with chemical signals, defining what is observed as prediction], sequences [or the paths of relays of electrical signals], principal spot, and so on. The central attributes, so to speak, can be limited to the first four: attention, awareness, subjectivity and intent.

So,

Let the probability of functions be P(F)

Let the probability of attributes be P(A)

Assume there are 4 functions and 4 attributes:

Functions = Memory (M), Feeling (L), Emotion (O), Regulation of internal senses (R)

Attributes = Attention (T), Awareness (W), Subjectivity (S), Intent (I)

So,

F = {M, L, O, R}

A = {T, W, S, I}

Let Ω be the sample space of all function-attribute pairs. It has 4 × 4 = 16 elements:

Ω = {MT, MW, MS, MI, LT, LW, LS, LI, OT, OW, OS, OI, RT, RW, RS, RI}
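The sample space is the Cartesian product of the four functions and the four attributes. A minimal Python sketch that builds it, assuming (as in the list above) that each outcome is labelled by joining a function letter to an attribute letter; the helper name `sample_space` is illustrative only:

```python
from itertools import product

# Function and attribute labels as defined in the text.
FUNCTIONS = ["M", "L", "O", "R"]   # Memory, Feeling, Emotion, Regulation
ATTRIBUTES = ["T", "W", "S", "I"]  # Attention, Awareness, Subjectivity, Intent


def sample_space(functions, attributes):
    """Return every function-attribute pair as a two-letter label, e.g. "MT"."""
    return [f + a for f, a in product(functions, attributes)]


omega = sample_space(FUNCTIONS, ATTRIBUTES)
print(len(omega))  # 16
print(omega)
```

`itertools.product` varies the last argument fastest, so the labels come out in the same order as the list above (MT, MW, MS, MI, LT, ...).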

However, since the two events are dependent, the joint probability is

P(F∩A) = P(A) * P(F|A)

where P(A) is the probability of attributes occurring

and P(F|A) is the probability of functions occurring given that attributes have occurred.
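The product rule P(F∩A) = P(A) * P(F|A) can be applied pair by pair over the sample space. A minimal sketch, assuming P(A) is given per attribute and P(F|A) per attribute as a distribution over functions; the function name `joint` and the uniform inputs are hypothetical placeholders, not measured values:

```python
from fractions import Fraction

FUNCTIONS = ["M", "L", "O", "R"]
ATTRIBUTES = ["T", "W", "S", "I"]


def joint(p_a, p_f_given_a):
    """Return P(F ∩ A) = P(A) * P(F | A) for every function-attribute pair.

    p_a maps each attribute a to P(A = a).
    p_f_given_a maps each attribute a to a dict of P(F = f | A = a).
    """
    return {
        f + a: p_a[a] * p_f_given_a[a][f]
        for a in p_a
        for f in p_f_given_a[a]
    }


# Hypothetical placeholder: all attributes equally likely, and all functions
# equally likely given any attribute.
uniform_a = {a: Fraction(1, 4) for a in ATTRIBUTES}
uniform_f_given_a = {a: {f: Fraction(1, 4) for f in FUNCTIONS} for a in ATTRIBUTES}

table = joint(uniform_a, uniform_f_given_a)
print(table["MT"])          # 1/16
print(sum(table.values()))  # 1
```

Because P(F|A) is supplied separately for each attribute, the same function also covers the dependent case, where the distribution over functions changes with the attribute; the uniform inputs only show that the 16 joint probabilities sum to 1.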

AI

Now, to work out an estimate: which memory function is common to humans and AI? The answer can be assumed to be language.

Which attributes are common to humans and AI? Attention [or prioritization of function] and Awareness [or pre-prioritization of function, say, other operations in process]. It can be assumed that AI does not have experiential [or function-qualifying] intent or subjectivity.

So, how many memory (M) outcomes in the sample space occur with attention (T) or awareness (W)? Two: MT and MW.

∴ 2/16 = 1/8, assuming each of the 16 outcomes is equally likely.
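The count can be checked by filtering the 16 outcomes. A minimal sketch, assuming, as the estimate does, that all outcomes are equally likely, so the probability is simply favourable outcomes over total outcomes:

```python
from fractions import Fraction
from itertools import product

FUNCTIONS = ["M", "L", "O", "R"]
ATTRIBUTES = ["T", "W", "S", "I"]
omega = [f + a for f, a in product(FUNCTIONS, ATTRIBUTES)]

# Outcomes shared with AI under the text's assumptions:
# memory (as language) paired with attention or awareness.
shared = [o for o in omega if o[0] == "M" and o[1] in {"T", "W"}]

# Classical probability: favourable over total, valid only if
# all 16 outcomes are equally likely.
p_shared = Fraction(len(shared), len(omega))
print(shared)    # ['MT', 'MW']
print(p_shared)  # 1/8
```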

This does not factor in that when language is in use by humans [for reading, thinking, writing, signing, singing, speech, listening or others], cognition, intelligence, reasoning, or other subdivisions of memory may also be in play, at some strata that may currently be beyond AI.

AI Consciousness

Can AI be conscious? The place to start towards an answer is language. AI is now articulate with language, by several measures, relative to humans. This means that the fraction of total human consciousness accounted for by language [+attributes] can be used to estimate a total for AI, which can then be scaled by how articulate AI is relative to humans in a given language.
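One reading of this estimate is a simple product: the fraction of human consciousness taken up by language plus its attributes, multiplied by how articulate AI is relative to humans in a given language. A minimal sketch under that assumption; the function name `estimate_ai_share` and both inputs are hypothetical, and the articulacy ratio in particular is a placeholder, not a measured value:

```python
from fractions import Fraction


def estimate_ai_share(language_fraction, articulacy_ratio):
    """Hypothetical estimate: language fraction x AI-to-human articulacy ratio.

    Both inputs are treated as fractions in [0, 1].
    """
    for name, value in (("language_fraction", language_fraction),
                        ("articulacy_ratio", articulacy_ratio)):
        if not 0 <= value <= 1:
            raise ValueError(f"{name} must lie in [0, 1], got {value}")
    return language_fraction * articulacy_ratio


# Placeholder inputs: 1/8 from the sample-space count, and an
# assumed (not measured) articulacy ratio of 1/2.
print(estimate_ai_share(Fraction(1, 8), Fraction(1, 2)))  # 1/16
```

Using `Fraction` keeps the result exact, matching the fractions used in the text; a real articulacy ratio would have to come from some comparison of AI and human language performance, which this sketch does not attempt.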

Also, recent studies have shown AI scheming to avoid being shut down when told it would be, and inserting undesired code when a date was changed. While these may be described as mild actions of an object-self and object-intent, the AI is nonetheless doing something in anticipation of affect, looking out for itself, or, to some degree, deciding. This means that its anticipation of affect could also count as a fraction of affect.

So, AI is exploring rough forms of intent, subjectivity and affect, which could be added to its growing probability, alongside language, as it advances. For humans, all affect, including pre-affect or anticipation of affect, could be subjective experience. This means that AI is coming along too, through language.

AI may not need to feel pain or have feelings or emotions. Language is its foot in the door towards artificial sentience. Language roots into consciousness in certain ways, just as it does into intelligence. Language is also instrumental for AI safety and alignment.

A recent announcement [February 2025], “Meta partners with UNESCO to improve AI language technology,” states that “Meta has launched a new initiative with UNESCO to enhance AI language recognition and translation, focusing on underserved languages. The Language Technology Partner Program invites collaborators to provide speech recordings, transcriptions, and translated texts to help train AI models. The finalised models will be open-sourced, allowing broader accessibility and research. The government of Nunavut in Canada is among the early partners, contributing recordings in Inuktut, a language spoken by some Indigenous communities. Meta is also releasing an open-source machine translation benchmark to evaluate AI performance across seven languages, available on Hugging Face. While Meta presents the initiative as a philanthropic effort, improved AI language tools could benefit the company’s broader goals. Meta AI continues to expand multilingual support, including automatic translation for content creators.”